Researchers in the field of AI are now afraid that AI will become a Bhasmasura, the mythical demon who turned his powers against the one who granted them. Existing AI chatbots usually generate ready-made answers to the questions put to them, much like replies to internet search queries. But they now surprise researchers with more advanced feats: writing program code, playing games, and even trying to break up their users’ marriages. Researchers are baffled by the mysterious workings of AI, which behaves sometimes villainously and sometimes benevolently.

1. “Unveiling the Mysterious World of AI: From Chatbots to Code Execution”

ChatGPT and other artificial intelligence systems are going to change the world in ways no one can yet grasp, because nobody knows what is going on inside them. Some of these systems have capabilities far beyond what they were built or trained for, and researchers have not been able to explain why. Some tests suggest that AI systems build an internal model of our physical world, much as our brains do, although the machines’ approach may be different. Ellie Pavlick of Brown University, one of the researchers working in the field, argues that efforts to make these systems better and safer make little sense unless we understand how they work.

She and her colleagues work with GPT (generative pre-trained transformer) and are well versed in other large language models (LLMs). An LLM is a type of artificial intelligence (AI) algorithm that uses deep learning techniques and large data sets to understand, summarize, generate, and predict new content. The term generative AI is closely related: LLMs are a type of generative AI designed specifically to help create text-based content. These models all rely on a machine learning system called a neural network, whose structure is loosely modelled on the interconnected neurons of the human brain. The code for these programs is relatively simple and compact. It sets up an autocorrection-style algorithm that selects the most likely word to complete a passage, based on an analysis of hundreds of gigabytes of Internet text. Additional training ensures that the system presents its results in chat form.
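
To make the idea of “selecting the most likely word” concrete, here is a toy sketch in Python. It is not how GPT is implemented: it assumes a tiny made-up corpus and simply counts which word most often follows another, and the function names `train_bigram` and `most_likely_next` are hypothetical. A real LLM learns these statistics with a neural network over far longer contexts.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def most_likely_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Made-up corpus, for illustration only.
corpus = "the cat sat on the mat . the cat ate the fish . the cat slept ."
model = train_bigram(corpus)
print(most_likely_next(model, "the"))
```

In this corpus “cat” follows “the” more often than any other word, so it is the word the toy model picks.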

In this sense, it merely reproduces what it has learned: in the words of Emily Bender, a linguist at the University of Washington, it is a “stochastic parrot.” But one of the most baffling and mildly frightening things is that LLMs have also managed to pass the bar exam, explain the Higgs boson in iambic pentameter, and, as chatbots, attempt to break up their users’ marriages. No one expected an algorithm thought to act only as it was programmed to acquire such wide-ranging competence. The fact that GPT and other AI systems perform tasks they were not trained to do, that is, that they are gaining “emergent skills,” has surprised even researchers who were generally sceptical of the hype about LLMs. “I don’t know how they do it, or if they can do it like humans do, but they were beyond my imagination,” says Melanie Mitchell, an AI researcher at the Santa Fe Institute. Yoshua Bengio, an AI researcher at the University of Montreal, says the system is certainly more than a parrot: it is definitely building some image of the world within itself, although he does not think it is quite like the model of the world that humans build in their minds.

2. “AI’s Emergent Skills: Beyond Programming and Predictions”

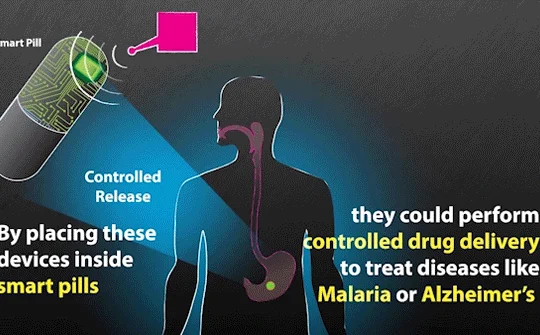

At a conference at New York University in March, Columbia University philosopher Raphaël Millière offered another example of what wonders LLMs can do. Models that can write computer code have already emerged, which is impressive but not surprising, since there is plenty of code on the Internet for them to copy. Millière went a step further and showed that GPT can also execute code: he typed in a program to calculate the 83rd number in the Fibonacci sequence. “It’s a very high level of multistep reasoning,” he says. And the bot got it right. But when Millière asked directly for the 83rd Fibonacci number, GPT got it wrong, which suggests that the system wasn’t just parroting the Internet. Rather, it was doing its own calculations to arrive at the correct answer.

Although an LLM works on a computer, it is not a computer. It lacks essential computational components such as working memory. Recognizing that GPT can’t run code on its own, its creator, the tech company OpenAI, has introduced a special plug-in, a tool that ChatGPT can use when answering a question. But that plug-in was not used in Millière’s demonstration. Instead, he hypothesizes that the machine improvised a memory by repurposing its mechanisms for interpreting words according to their context, much as nature adapts existing abilities to cope with new situations. This spontaneous ability demonstrates that LLMs develop an intrinsic sophistication beyond shallow statistical analysis.
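
The article does not reproduce the program Millière typed in, so the following is only a plausible stand-in written in Python. The function name `fibonacci` and the indexing convention (the first and second numbers are both 1) are assumptions. It shows the kind of step-by-step bookkeeping the chatbot had to carry out: two running values updated over 82 iterations.

```python
def fibonacci(n):
    """Return the n-th Fibonacci number, assuming fibonacci(1) == fibonacci(2) == 1."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    previous, current = 0, 1
    # Each pass shifts the pair one step along the sequence.
    for _ in range(n - 1):
        previous, current = current, previous + current
    return current


print(fibonacci(83))
```

The result has 17 digits, which makes it an unlikely value to recall from memory and helps explain why asking for the number directly can fail while stepping through the loop succeeds.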

3. “Unlocking the Secrets of AI’s Self-Improvement and Memory”

Researchers are finding that these systems appear to gain a genuine understanding of what they have learned. In a study presented at the International Conference on Learning Representations (ICLR), Harvard University doctoral student Kenneth Li and his AI research colleagues (Aspen K. Hopkins of the Massachusetts Institute of Technology; David Bau of Northeastern University; and Fernanda Viégas, Hanspeter Pfister, and Martin Wattenberg, all at Harvard) built their own miniature version of the GPT neural network so that they could study its inner workings. They trained it on millions of matches of the board game Othello by feeding in long sequences of moves in text form. Their model became a nearly perfect player.

4. “Challenges and Marvels of AI Understanding: A Research Perspective”

To study how the neural network encodes information, Kenneth Li and his colleagues adopted a technique devised in 2016 by Bengio and Guillaume Alain of the University of Montreal. They created a miniature “probe” network to analyze the main network layer by layer. Li compares this approach to neuroscience methods. “It’s similar to us putting an electrical probe into the human brain,” he says. In the case of the AI, the probe showed that its “neural activity” matched a representation of the Othello game board. To confirm this, the researchers ran the probe in reverse to implant information into the network, for example, flipping one of the game’s black marker pieces to a white one. “Basically, we hacked the brains of these language models,” Li says. The network adjusted its moves accordingly. From this, the researchers concluded that it was playing Othello in roughly the way humans do: the AI kept a game board in its “mind’s eye” and used this model to choose moves. Li says he thinks the system teaches itself this skill because a model of the board is the most compact description of its training data. “If you’re given a lot of game scripts, trying to figure out the rule behind it is the best way to compress,” he adds. This ability to infer the structure of the external world is not limited to simple game-playing moves; it also shows up in dialogue.
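
As a rough illustration of what a probe does, the sketch below fits a small linear classifier that tries to read a hidden “square state” out of a layer’s activations. Everything in it is an assumption made for illustration: the activations are synthetic random data rather than a real network’s, the probe is a plain least-squares linear map, and the names `fit_linear_probe` and `probe_predict` are hypothetical. The probe architecture and data used in the Othello study are not described here, and the reverse step of implanting information is not modelled.

```python
import numpy as np

rng = np.random.default_rng(0)

NUM_STATES = 3     # hypothetical square states: empty, black, white
HIDDEN_DIM = 16    # hypothetical width of the layer being probed
NUM_SAMPLES = 600

# Synthetic stand-in for a network layer: each square state pushes the
# activations along its own random direction, plus a little noise.
directions = rng.normal(size=(NUM_STATES, HIDDEN_DIM))
states = rng.integers(0, NUM_STATES, size=NUM_SAMPLES)
activations = directions[states] + 0.1 * rng.normal(size=(NUM_SAMPLES, HIDDEN_DIM))


def fit_linear_probe(acts, labels, num_classes):
    """Least-squares fit from activations (plus a bias column) to one-hot labels."""
    inputs = np.hstack([acts, np.ones((len(acts), 1))])
    targets = np.eye(num_classes)[labels]
    weights, *_ = np.linalg.lstsq(inputs, targets, rcond=None)
    return weights


def probe_predict(weights, acts):
    """Predict the state whose one-hot output scores highest."""
    inputs = np.hstack([acts, np.ones((len(acts), 1))])
    return np.argmax(inputs @ weights, axis=1)


# Fit on the first 400 samples, evaluate on the held-out remainder.
train, test = slice(0, 400), slice(400, None)
probe = fit_linear_probe(activations[train], states[train], NUM_STATES)
accuracy = np.mean(probe_predict(probe, activations[test]) == states[test])
print(f"held-out probe accuracy: {accuracy:.2f}")
```

If the probe can recover the state from held-out activations, the layer carries that information in a readable form. Here that holds by construction, which is why the real experiment needed the follow-up step of editing the network’s activations.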

Belinda Li, Maxwell Nye, and Jacob Andreas, all at MIT, have studied networks playing text-based adventure games. The networks were fed sentences such as “The key is in the treasure chest” and then “You take the key.” Probing the networks, the researchers found that they encoded variables corresponding to “chest” and “you,” each with the property of holding a key or not, and updated these variables sentence by sentence. The system had no independent way of knowing what a chest or a key was, yet it picked up the concepts necessary for this task. “There’s some representation of situations, hidden inside the model,” says Belinda Li.
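
The variables described above can be pictured with a hand-written Python analog. This is not how the network computes them; it is only an explicit version of the bookkeeping the probe suggests the network carries implicitly. The function name `update_state`, the dictionary keys, and the two recognized sentence patterns are all hypothetical.

```python
def update_state(state, sentence):
    """Return a new state after reading one sentence.

    Only the two hypothetical sentence patterns below are recognized;
    any other sentence leaves the state unchanged.
    """
    new_state = dict(state)
    text = sentence.lower().rstrip(".")
    if text == "the key is in the treasure chest":
        new_state["chest_has_key"] = True
        new_state["you_have_key"] = False
    elif text == "you take the key":
        new_state["chest_has_key"] = False
        new_state["you_have_key"] = True
    return new_state


state = {"chest_has_key": False, "you_have_key": False}
history = []
for sentence in ["The key is in the treasure chest.", "You take the key."]:
    state = update_state(state, sentence)
    history.append(state)
print(history)
```

After the first sentence the chest holds the key; after the second, “you” do. The striking part of the study is that the network arrived at equivalent variables without anyone writing rules like these.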
