Okay, let’s talk about Google’s Nano Banana — yes, that’s actually what it’s called. Weird name aside, this little thing might soon become one of the most interesting AI tools hiding inside your phone.

So here’s the deal. Nano Banana is the nickname for Google’s Gemini 2.5 Flash Image model: basically, an AI tool that can create images from text prompts and edit photos with scary-good precision. You tell it what you want (a cleaner sky, a new background, a missing object filled in) and it just does it.

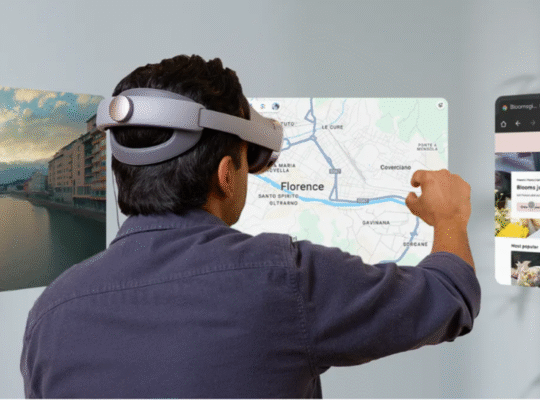

Until now, it’s been kind of tucked away in testing. But according to a new report from Android Authority, Google seems to be gearing up to roll it out across its biggest apps — including Google Lens and Circle to Search.

That means instead of bouncing between editing apps or AI generators, you might soon be able to draw a circle around something in a photo or search window and instantly tell Google to “fix this,” “add that,” or “make it look better.”

Pretty wild, right?

What’s Happening Behind the Scenes

Android Authority dug into the code of the Google app for Android (version 16.40.18.sa.arm64) and found some new experimental features. One of them brings back the Live option in Google Lens, along with a new Create button powered by Nano Banana.

They also spotted signs that Google is testing Nano Banana integrations in Search and Translate, though those are still in early stages.

As for Circle to Search, the feature that lets you doodle or circle something on your screen and search for it instantly, Nano Banana might soon play a role there too. The feature apparently already shows a “Create” option in development versions of the app, but it doesn’t do anything yet. Translation: Google’s testing it quietly, behind the curtain.

And while Google hasn’t officially said a word, one tiny clue came straight from the top. Rajan Patel, Google’s VP of Search Engineering (and the guy who co-founded Lens), reposted the Android Authority article on X with the caption:

“Keep your 👀 peeled 🍌.”

So yeah — that’s basically a wink and a nod.

Why People Are Excited About Nano Banana

Here’s the thing — people who’ve actually used Nano Banana love it. There’s already a small but growing crowd on Reddit calling it “extremely good” and “super useful.”

And it’s not hard to see why. Rather than feeling like some heavyweight, standalone AI tool, Nano Banana seems designed for speed, simplicity, and instant results: the kind of thing you can use on the fly.

If Google really does roll this out to Lens, Search, and Translate, you could soon edit photos, generate new visuals, and search contextually without ever leaving the app you’re in.

Imagine circling a product in a photo and asking Google to “show me this in black,” or snapping a landmark and asking it to “create a similar image at sunset.” That’s where things are headed.

The Bigger Picture

Google has been slowly blending AI into almost everything it makes — but Nano Banana feels different. It’s not about answering questions or writing text; it’s about seeing and creating.

It’s part of a larger trend in which AI is no longer just a chatbot; it’s becoming part of your phone’s natural workflow. Editing, searching, translating… all happening together, powered by the same smart system under the hood.

And if that “Keep your 👀 peeled 🍌” hint is anything to go by, we won’t have to wait too long before Nano Banana shows up somewhere inside your Google apps.

When it does, it might quietly change how you interact with your photos, your camera, and even your search results — one small banana at a time. 🍌
